What AI Buyers Must Check Before Choosing a Text Annotation Company

AI buyers should evaluate a text annotation company by reviewing its data quality standards, domain expertise, security practices and collaborative approach. These checks help ensure that annotated data aligns with model objectives and supports growth-focused AI outcomes.

Text annotation is a foundational step in building machine learning systems. A well-aligned annotation partner contributes to clarity, consistency and efficiency throughout the AI development cycle.

How Text Annotation Supports Machine Learning Performance

Text annotation structures unlabelled text so that models can identify the patterns, intent and meaning in it. Text annotation companies support the following tasks:

- Named Entity Recognition

- Sentiment Analysis

- Intent Classification

- Text Summarization

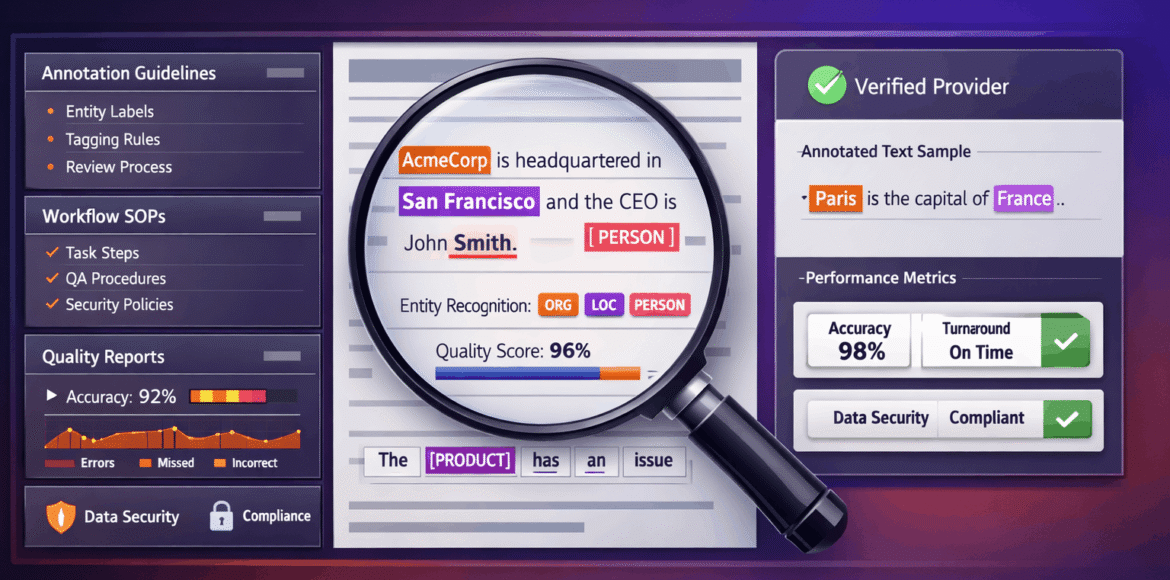

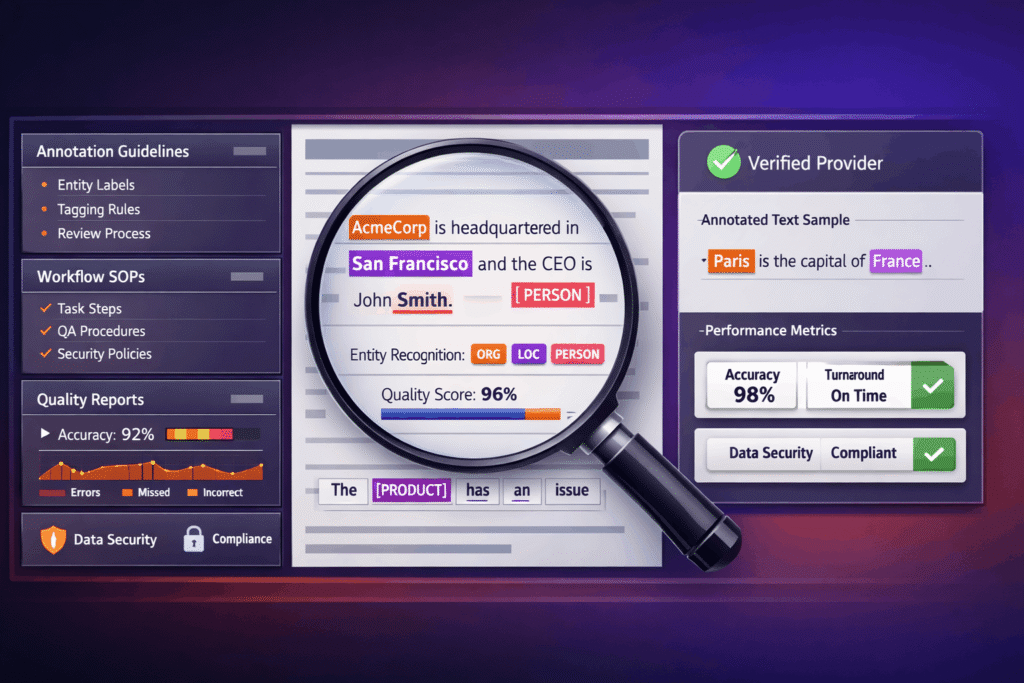

Annotation Quality

Consistent and accurate labels strengthen model training. To verify label quality, AI buyers should review the following aspects:

- Defined annotation guidelines tailored for the project scope

- Validation steps, including peer reviews or expert checks

- Accuracy benchmarks

- Reporting methods
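
One way to make an accuracy benchmark concrete is to compare a vendor's labels against a small gold set that the buyer's own experts have labelled. The sketch below is a minimal illustration, not a vendor's actual method: the function name `accuracy_against_gold` and the sample sentiment labels are hypothetical.

```python
from collections import Counter


def accuracy_against_gold(gold, vendor):
    """Return (overall accuracy, per-label accuracy) against a gold set.

    Per-label accuracy is the share of gold items with that label
    which the vendor labelled identically.
    """
    if len(gold) != len(vendor):
        raise ValueError("gold and vendor label lists must have the same length")
    if not gold:
        raise ValueError("at least one labelled item is required")

    total = Counter(gold)
    correct = Counter(g for g, v in zip(gold, vendor) if g == v)

    overall = sum(correct.values()) / len(gold)
    per_label = {label: correct[label] / count for label, count in total.items()}
    return overall, per_label


# Hypothetical sentiment labels for six items
gold = ["pos", "neg", "neg", "neu", "pos", "neg"]
vendor = ["pos", "neg", "neu", "neu", "pos", "neg"]

overall, per_label = accuracy_against_gold(gold, vendor)
print(f"overall accuracy: {overall:.2f}")
for label, score in sorted(per_label.items()):
    print(f"  {label}: {score:.2f}")
```

A per-label breakdown is useful because a high overall score can hide weak performance on a rare but important label.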

Domain Expertise

The text annotation company must demonstrate that it can interpret text correctly within the relevant domain. Buyers should verify the following factors:

- Previous exposure to similar types of data, including legal documents, healthcare texts, financial disclosures, technical documents or customer communications.

- Selection or training of annotators who know the terminology, writing styles and context of the domain.

- Ambiguity escalation mechanisms, such as defined procedures for routing uncertain cases to senior reviewers or subject matter experts.

- Terminology governance, including maintained glossaries or ontologies that enforce consistent labelling in datasets.

Annotation Workflow

A structured workflow improves coordination and makes results more predictable. The core workflow areas to review at a text annotation company are as follows:

| Workflow Area | What It Covers | Value for AI Buyers |
|---|---|---|
| Data Collection & Preparation | Gathering relevant raw data (images, text, audio) and cleaning it by removing duplicates, fixing errors and standardising formats. | Provides high-quality input, which directly influences the accuracy and reliability of the final AI model. |
| Guideline Development | Creating precise instructions for tasks like Named Entity Recognition (NER), Sentiment Analysis or Intent Tagging. | Standardises output across annotators to ensure consistency. |
| Review Cycles | Ongoing validation steps. | Maintains quality. |
| Progress Reporting | Regular updates and metrics. | Improves visibility. |

Data Protection Standards

Text datasets may include confidential, personal, or proprietary information.

AI buyers should confirm that the text annotation company maintains:

- Role-based access controls, which limit dataset access to authorised personnel only.

- Secure annotation environments, where copying, downloading, or external data transfer is restricted.

- Regulatory alignment, such as GDPR compliance or SOC 2–aligned operational controls, where applicable.

- Defined data lifecycle policies, covering data usage, retention duration and deletion procedures.

Capacity Planning

AI projects often evolve in scope and volume. For that reason, a well-prepared annotation provider typically offers:

- Resource planning to accommodate changing annotation volumes

- Consistent quality processes during scale-up periods

- Long-term support for continuous or recurring annotation needs

Communication Structure

Operational clarity contributes to efficient collaboration. AI buyers should review:

- Assigned project ownership, with responsibility for quality, timelines and issue resolution.

- Well-specified escalation channels and response times, including for quality slippage or scope changes.

- Transparent pricing and scope management, with a documented process for approving changes.

Conclusion

AI buyers can systematically evaluate any text annotation company based on quality management, domain alignment, data protection and governance practices. Using this framework supports consistent vendor comparison, clarifies expectations on delivery and accountability, and helps ensure that annotated text data remains reliable as AI initiatives evolve.

FAQs

1. What documentation should be requested during vendor evaluation?

Request annotation guidelines, quality reports, workflow SOPs and data security policies, and review them before choosing a text annotation service provider.

2. Is a pilot project necessary before vendor selection?

Yes. Pilots using representative data provide practical validation of quality and domain alignment.

3. How should annotation quality be monitored after onboarding?

Through ongoing agreement metrics, error analysis reports and periodic quality audits.
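
A common agreement metric for two annotators labelling the same items is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a minimal illustration under stated assumptions: the function name `cohen_kappa` and the sample intent labels are hypothetical, and a vendor may report a different metric.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labelled the same items."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both annotators must label the same number of items")
    if not labels_a:
        raise ValueError("at least one labelled item is required")

    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Expected chance agreement from each annotator's own label distribution
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical intent labels from two annotators on four items
annotator_1 = ["refund", "refund", "cancel", "cancel"]
annotator_2 = ["refund", "cancel", "cancel", "cancel"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(f"kappa: {kappa:.2f}")
```

Tracking this value per batch over time makes it easier to see when guideline changes or new annotators affect consistency.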